OpenAI and the road to text-guided image generation: DALL·E, CLIP, GLIDE, DALL·E 2 (unCLIP) | by Grigory Sapunov | Intento

Akridata Announces Integration of Open AI's CLIP Technology to Deliver an Enhanced Text to Image Experience for Data Scientists and Data Curation Teams

Makeshift CLIP vision for GPT-4, image-to-language > GPT-4 prompting Shap-E vs. Shap-E image-to-3D - API - OpenAI Developer Forum

Makeshift CLIP vision for GPT-4, image-to-language > GPT-4 prompting Shap-E vs. Shap-E image-to-3D - API - OpenAI Developer Forum

Simon Willison on X: "Here's the interactive demo I built demonstrating OpenAI's CLIP model running in a browser - CLIP can be used to compare text and images and generate a similarity

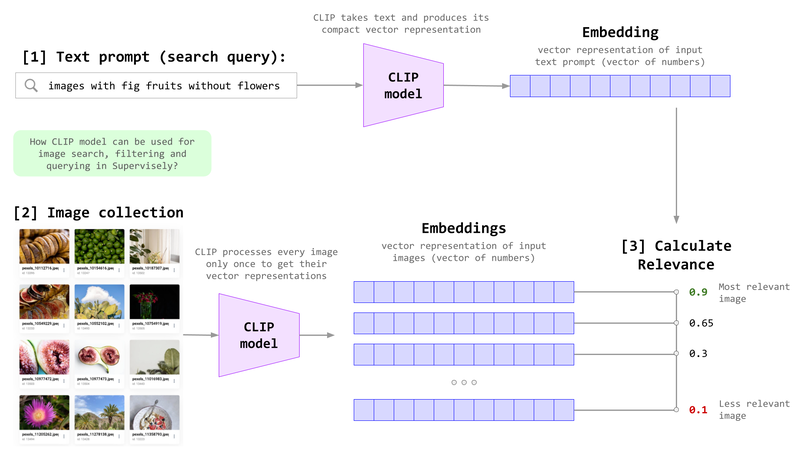

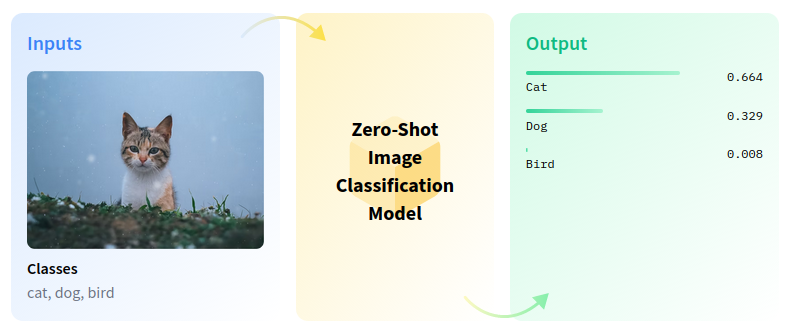

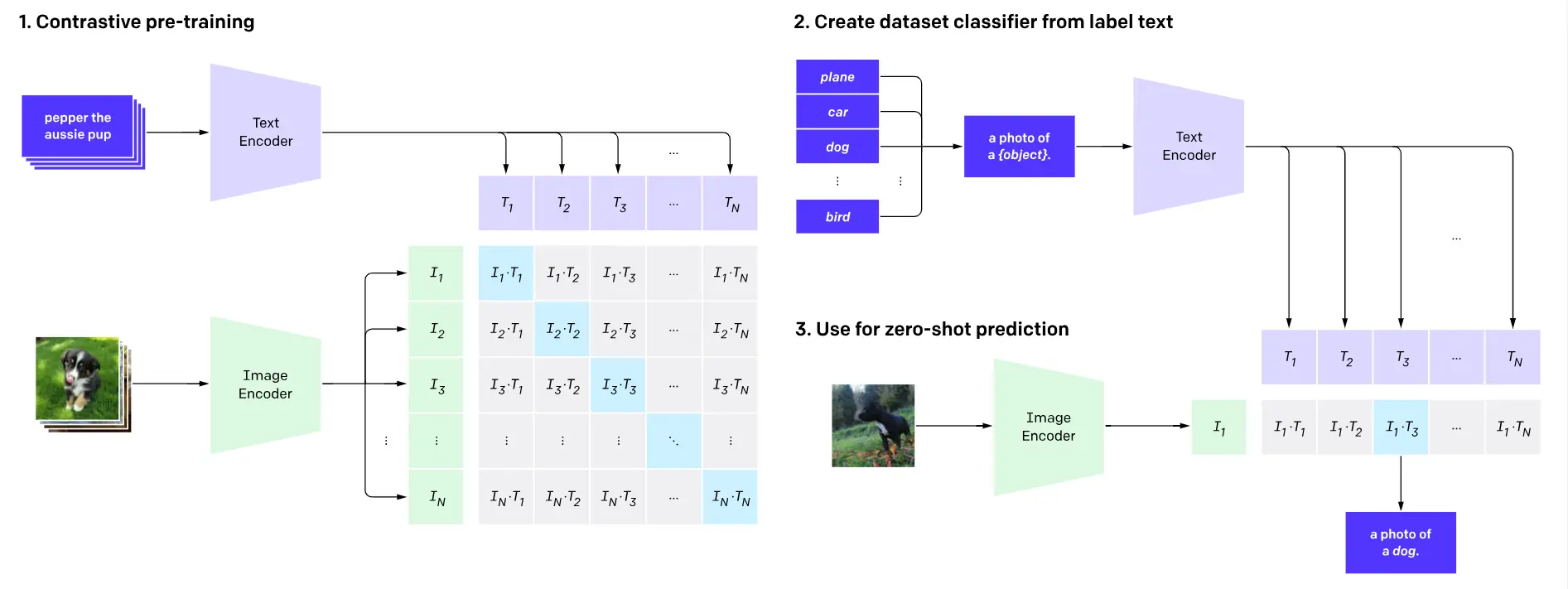

Zero-shot Image Classification with OpenAI CLIP and OpenVINO™ — OpenVINO™ documentationCopy to clipboardCopy to clipboardCopy to clipboardCopy to clipboardCopy to clipboardCopy to clipboardCopy to clipboardCopy to clipboardCopy to clipboardCopy to ...

![P] I made an open-source demo of OpenAI's CLIP model running completely in the browser - no server involved. Compute embeddings for (and search within) a local directory of images, or search P] I made an open-source demo of OpenAI's CLIP model running completely in the browser - no server involved. Compute embeddings for (and search within) a local directory of images, or search](https://external-preview.redd.it/W9YcFgBnfZDMlabAtrfk4CNq8IjFz7gmrlOz2NkSIKs.png?format=pjpg&auto=webp&s=7617eef5cbad7a9c0399650933d416ae43c14740)

P] I made an open-source demo of OpenAI's CLIP model running completely in the browser - no server involved. Compute embeddings for (and search within) a local directory of images, or search

![P] OpenAI CLIP: Connecting Text and Images Gradio web demo : r/MachineLearning P] OpenAI CLIP: Connecting Text and Images Gradio web demo : r/MachineLearning](https://external-preview.redd.it/wL9RVy2fh170CMOokERKYZ_2Xw5fz6G5U-CaGMLA7rU.jpg?auto=webp&s=9ad567ec8e7502aedee90db04008811e8c4fad36)

![P] OpenAI CLIP: Connecting Text and Images Gradio web demo : r/MachineLearning P] OpenAI CLIP: Connecting Text and Images Gradio web demo : r/MachineLearning](https://preview.redd.it/qstepjl4o6q61.jpg?vthumb=1&s=243f652b639e962e47959f166efabc3d083cf514)

.png)