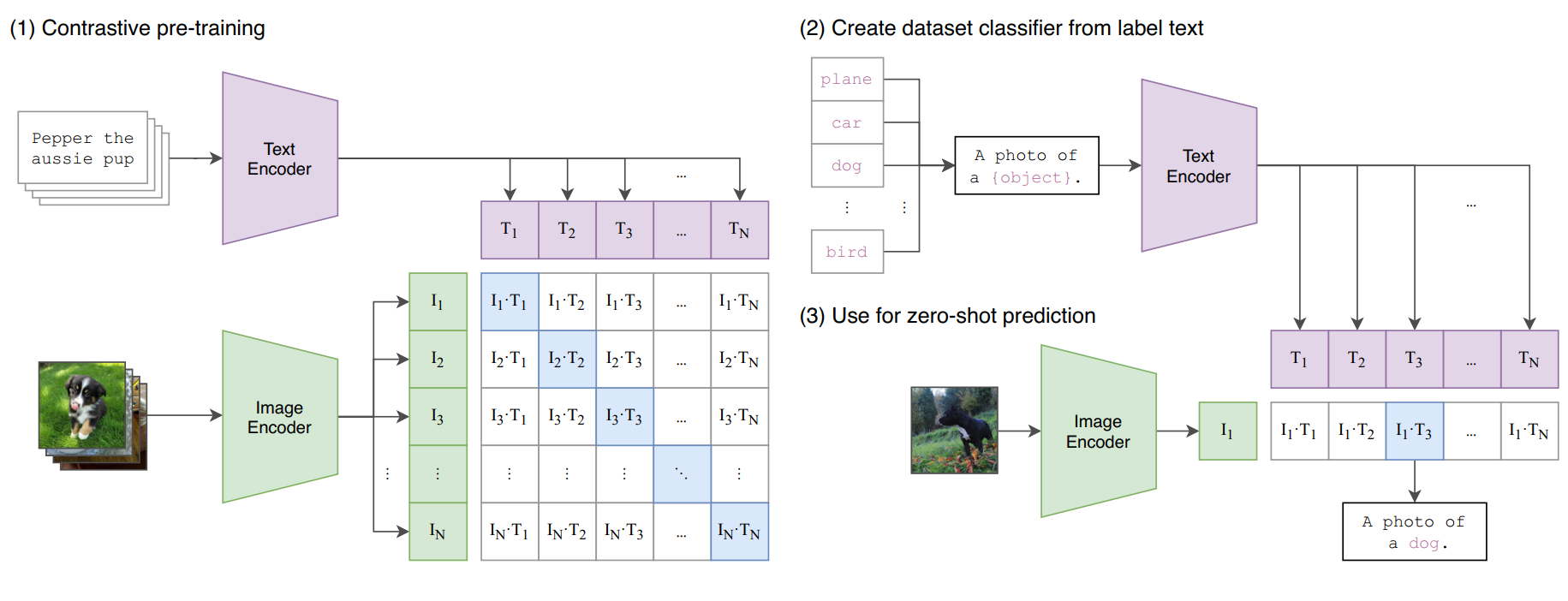

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

Contrastive Language–Image Pre-training (CLIP)-Connecting Text to Image | by Sthanikam Santhosh | Medium

GitHub - huggingface/pytorch-image-models: PyTorch image models, scripts, pretrained weights -- ResNet, ResNeXT, EfficientNet, NFNet, Vision Transformer (ViT), MobileNet-V3/V2, RegNet, DPN, CSPNet, Swin Transformer, MaxViT, CoAtNet, ConvNeXt, and more

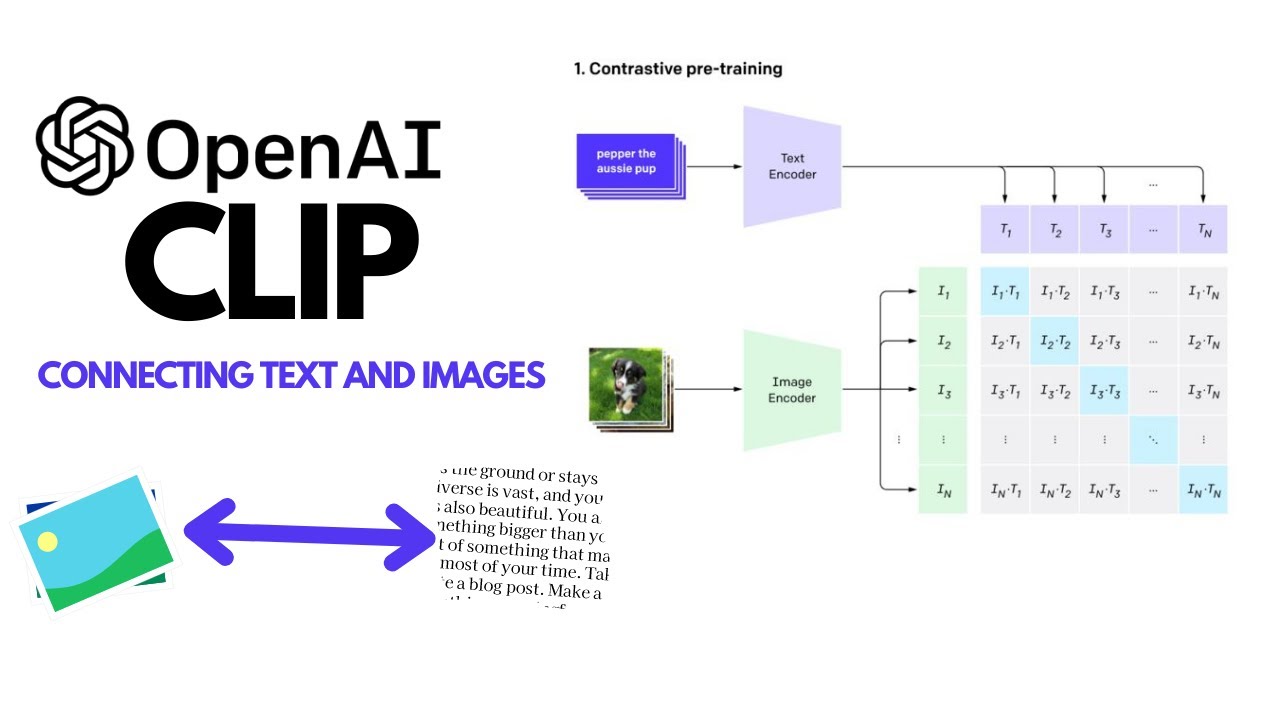

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning - YouTube

Explaining the code of the popular text-to-image algorithm (VQGAN+CLIP in PyTorch) | by Alexa Steinbrück | Medium

Implement unified text and image search with a CLIP model using Amazon SageMaker and Amazon OpenSearch Service | AWS Machine Learning Blog

Mastering the Huggingface CLIP Model: How to Extract Embeddings and Calculate Similarity for Text and Images | Code and Life

![[P] I made an open-source demo of OpenAI's CLIP model running completely in the browser - no server involved. Compute embeddings for (and search within) a local directory of images, or search](https://external-preview.redd.it/W9YcFgBnfZDMlabAtrfk4CNq8IjFz7gmrlOz2NkSIKs.png?format=pjpg&auto=webp&s=7617eef5cbad7a9c0399650933d416ae43c14740)