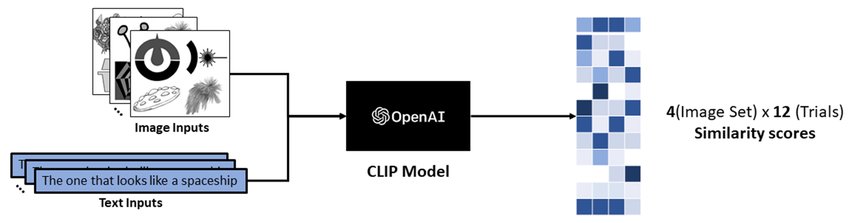

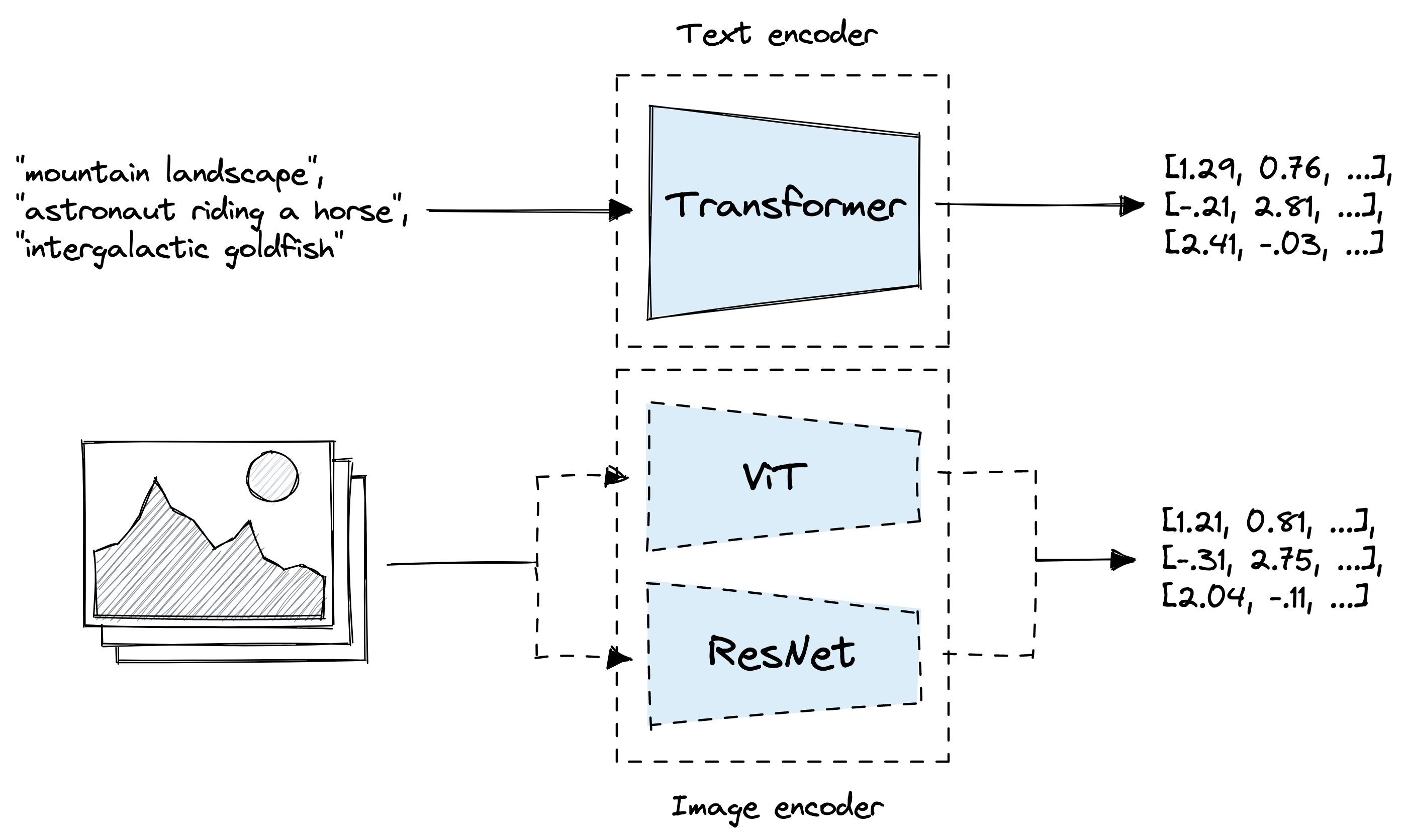

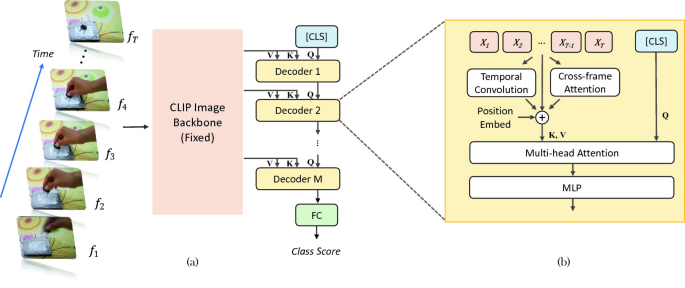

Process diagram of the CLIP model for our task. This figure is created...

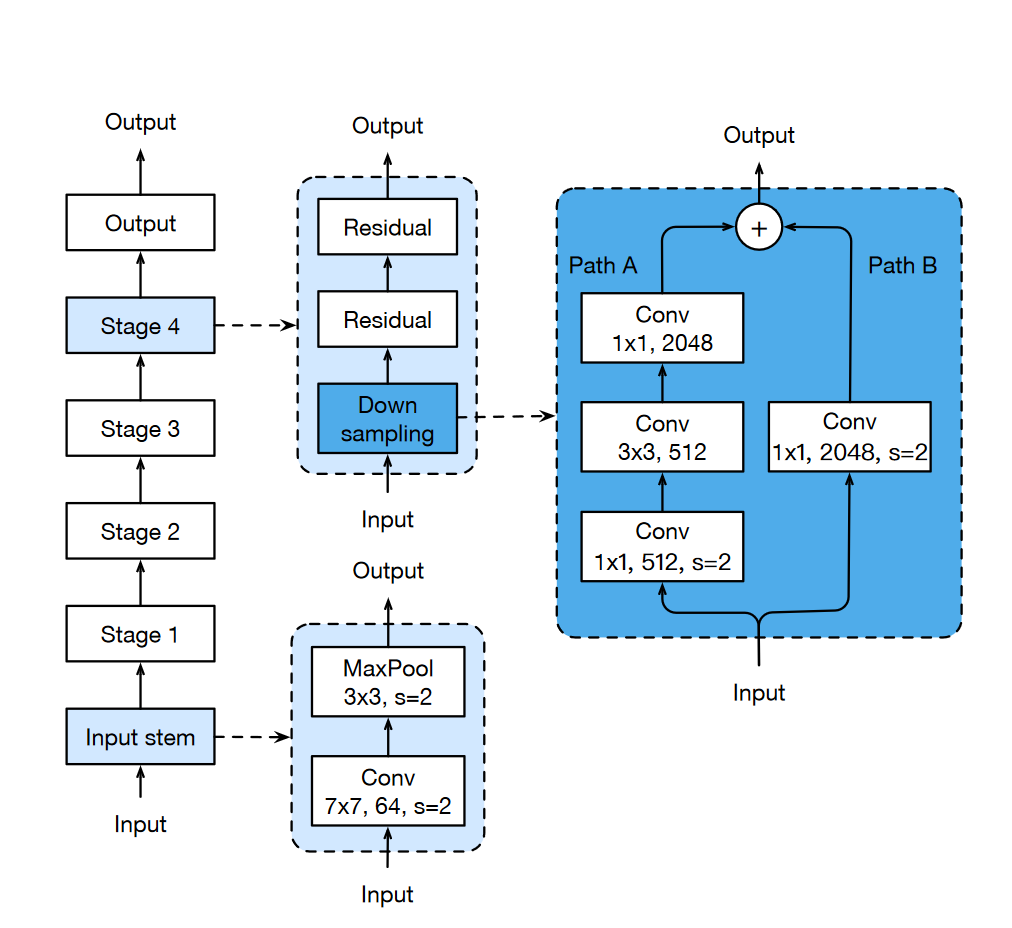

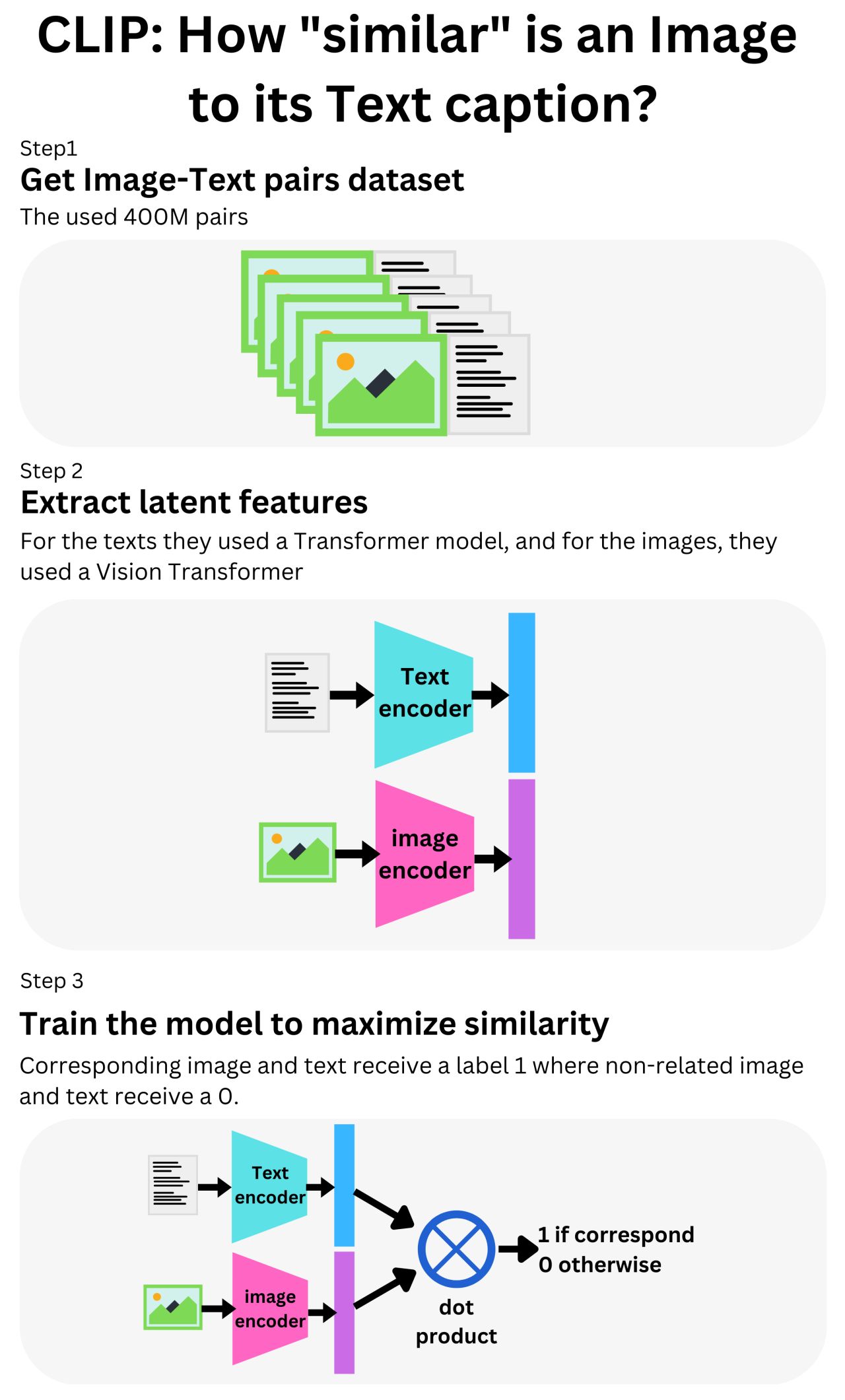

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

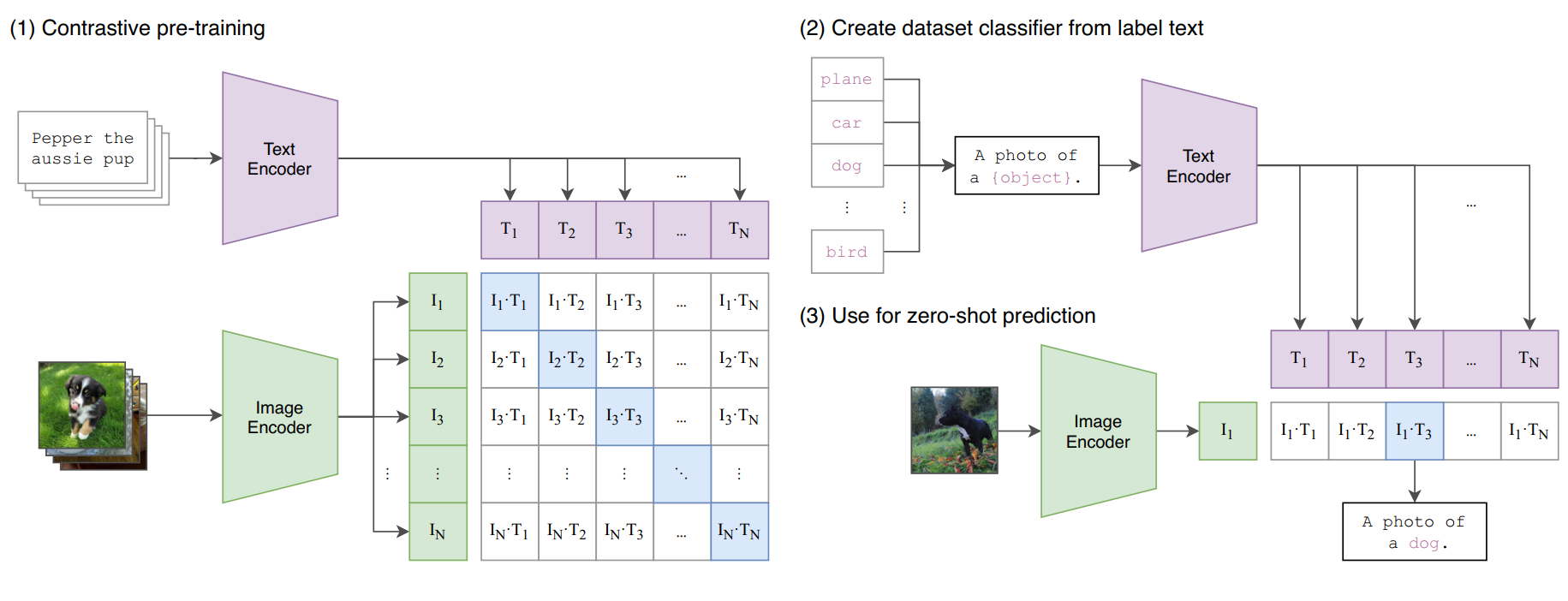

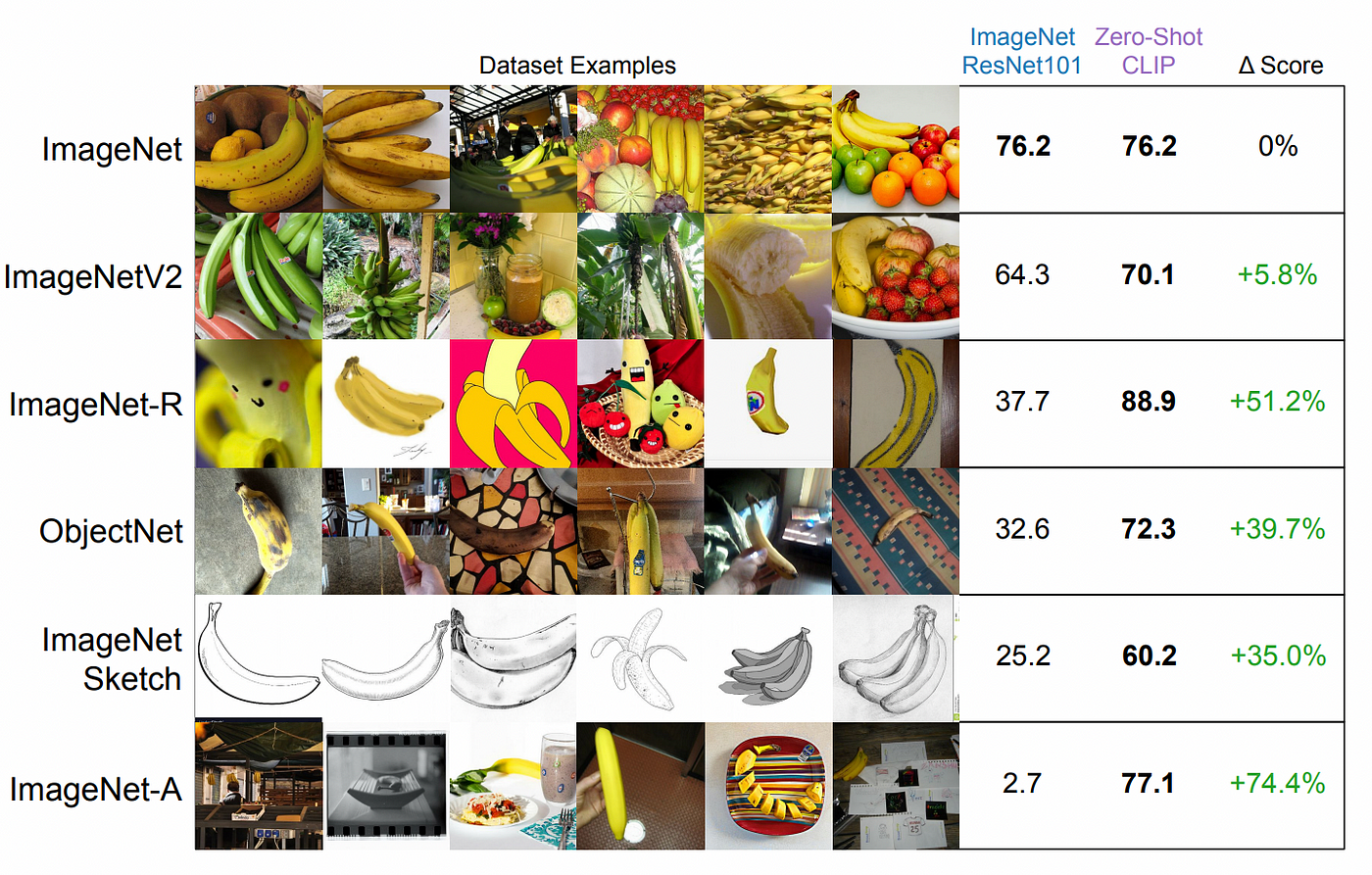

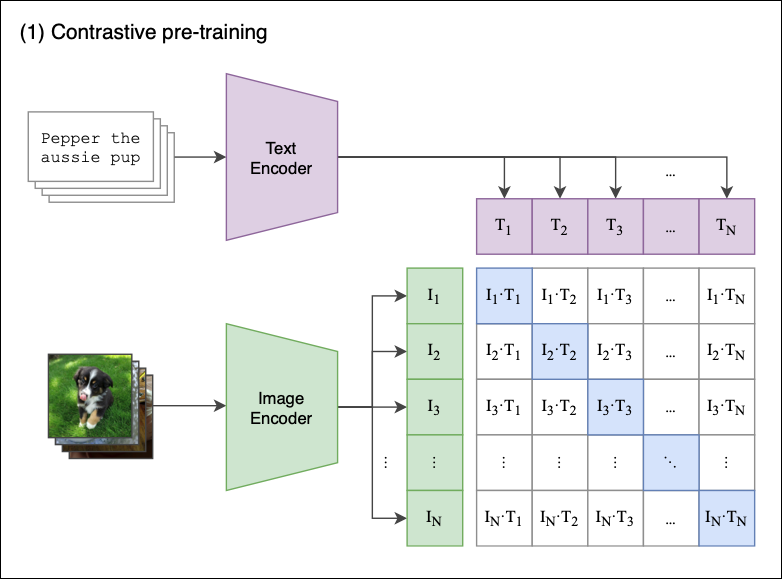

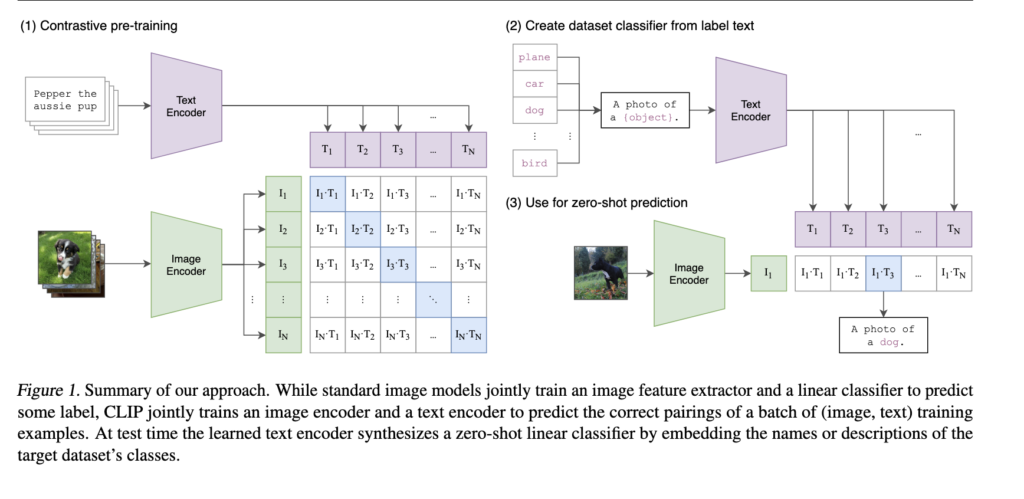

Using CLIP to Classify Images without any Labels | by Cameron R. Wolfe, Ph.D. | Towards Data Science

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image
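The repository caption describes CLIP's core use: given an image, predict the most relevant text snippet. The sketch below is a minimal illustration of that matching step, assuming the image and text embeddings have already been produced by CLIP's encoders. The toy 3-dimensional vectors, the function names `l2_normalize` and `most_relevant_snippet`, and the `temperature=100.0` scale are hypothetical stand-ins for illustration. This is not the openai/CLIP API. The score is cosine similarity between L2-normalized embeddings, scaled and passed through a softmax over the candidate snippets.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so that dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def most_relevant_snippet(image_emb, text_embs, snippets, temperature=100.0):
    """Pick the snippet whose (hypothetical) text embedding best matches the image.

    image_emb: embedding of one image (assumed output of an image encoder)
    text_embs: one embedding per candidate snippet (assumed text-encoder outputs)
    temperature: assumed logit scale applied to the cosine similarities
    """
    img = l2_normalize(image_emb)
    logits = []
    for emb in text_embs:
        txt = l2_normalize(emb)
        cosine = sum(a * b for a, b in zip(img, txt))
        logits.append(temperature * cosine)

    # Softmax over the candidates; subtract the max logit for numerical stability.
    peak = max(logits)
    exps = [math.exp(logit - peak) for logit in logits]
    total = sum(exps)
    probs = [e / total for e in exps]

    best = max(range(len(probs)), key=probs.__getitem__)
    return snippets[best], probs


if __name__ == "__main__":
    # Toy embeddings: the "image" vector points mostly along the "dog" axis.
    snippet, probs = most_relevant_snippet(
        [0.9, 0.1, 0.0],
        [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
        ["a photo of a dog", "a photo of a cat", "a diagram"],
    )
    print(snippet, [round(p, 4) for p in probs])
```

Because each candidate is just a text prompt, swapping in class-name prompts such as "a photo of a {label}" turns this same step into label-free classification.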

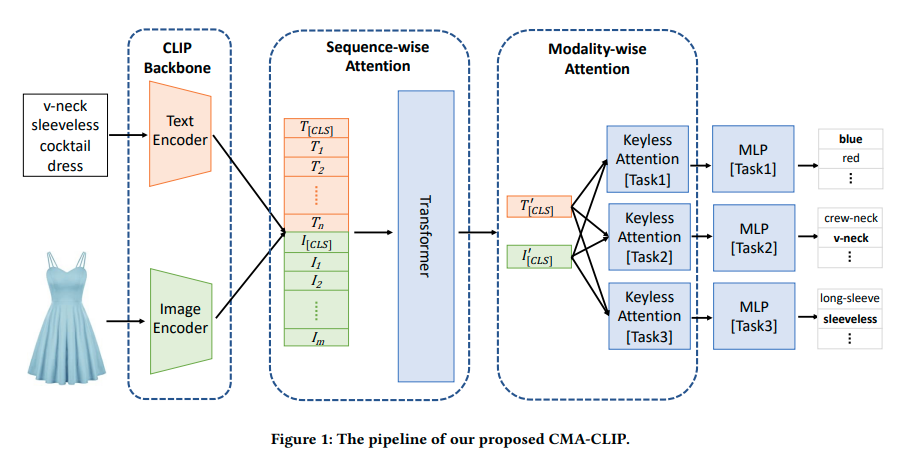

Architectural design of the CLIP-GLaSS framework for the text-to-image task

This AI Research Unveils ComCLIP: A Training-Free Method in Compositional Image and Text Alignment - MarkTechPost

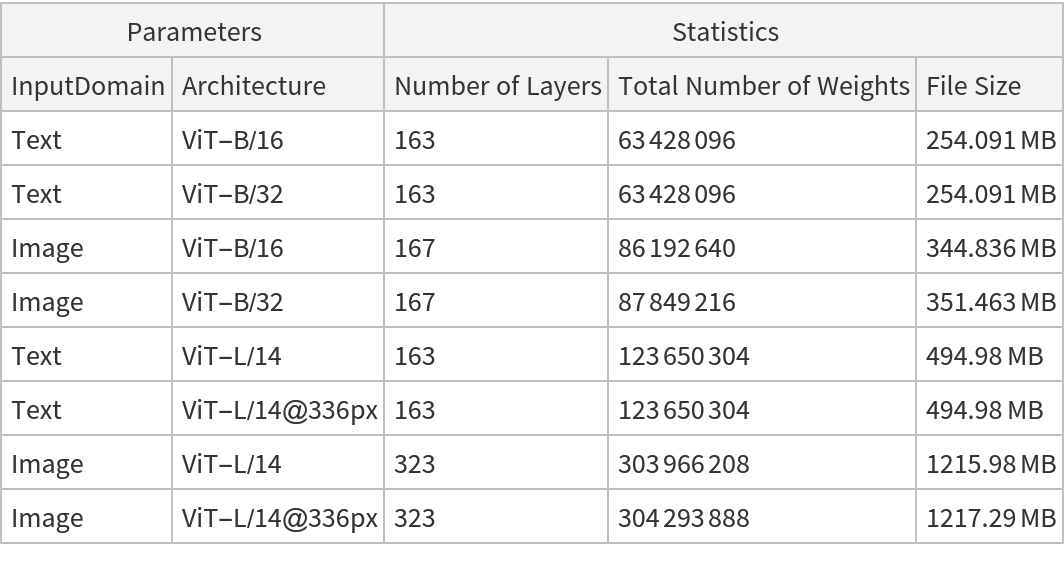

Rosanne Liu on X: "A quick thread on "How DALL-E 2, Imagen and Parti Architectures Differ" with breakdown into comparable modules, annotated with size 🧵 #dalle2 #imagen #parti * figures taken from

Build an image-to-text generative AI application using multimodality models on Amazon SageMaker | AWS Machine Learning Blog

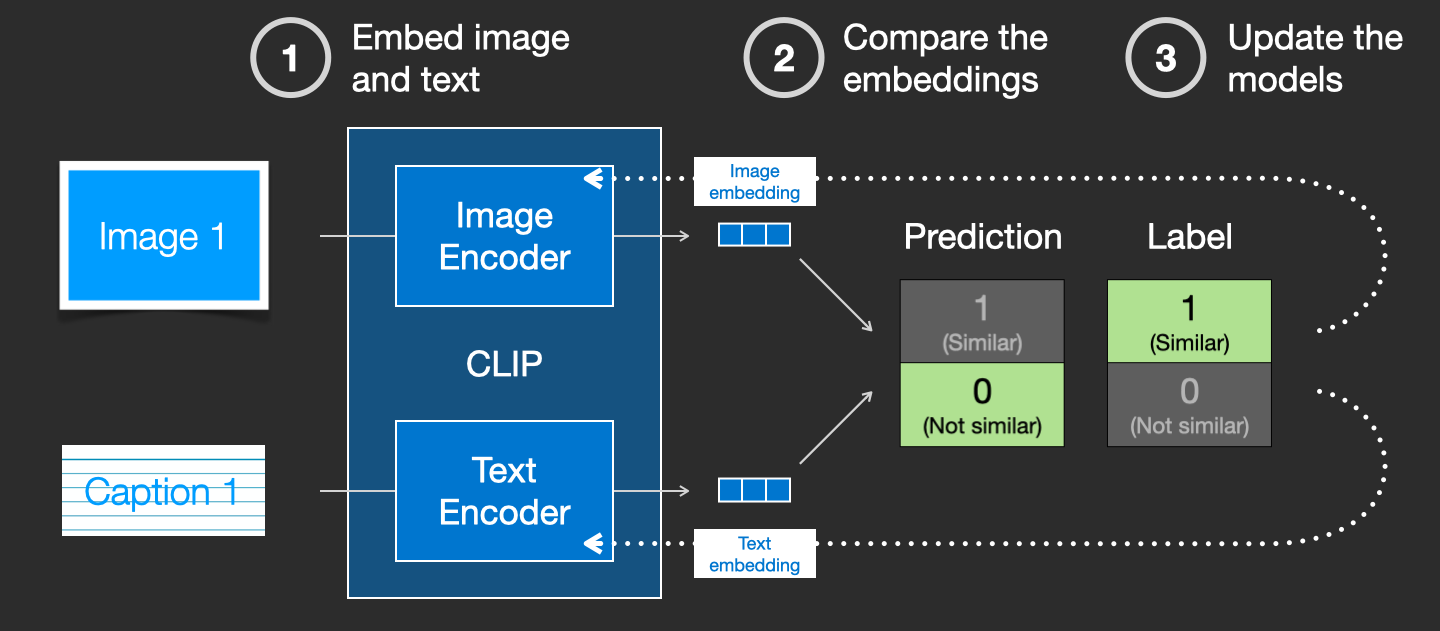

Understand CLIP (Contrastive Language-Image Pre-Training) — Visual Models from NLP | by mithil shah | Medium

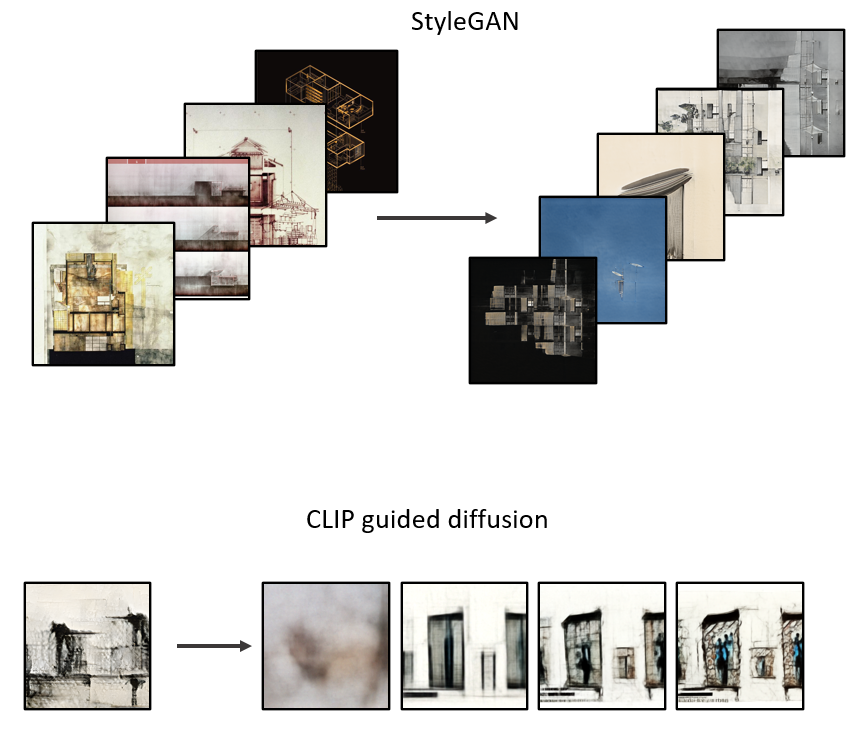

The Illustrated Stable Diffusion – Jay Alammar – Visualizing machine learning one concept at a time.