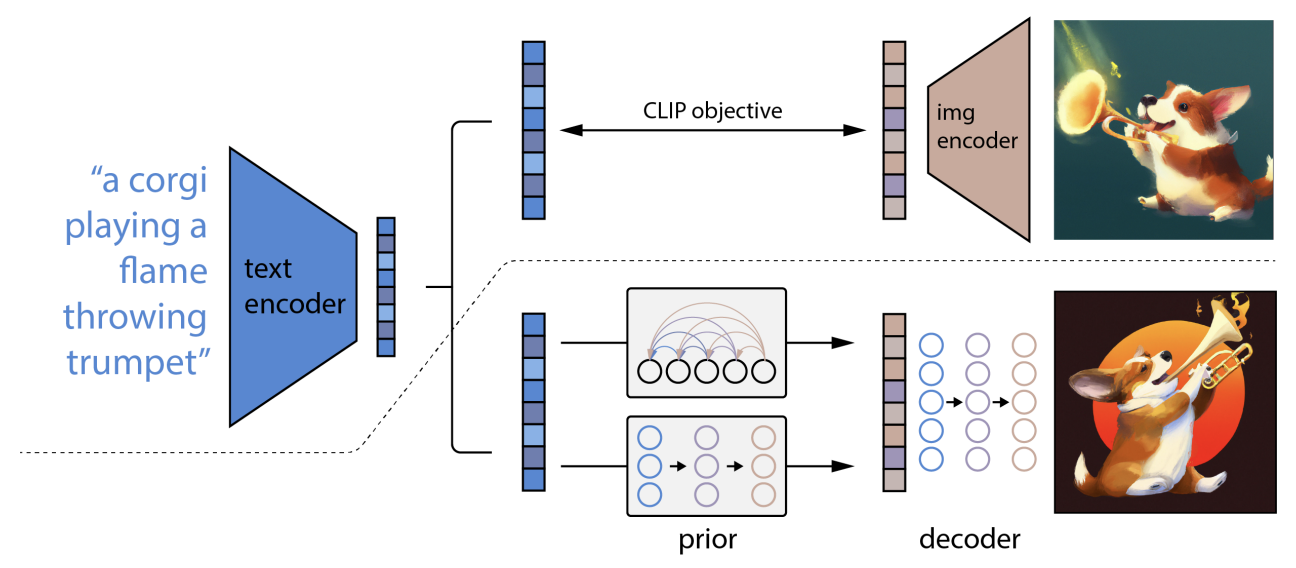

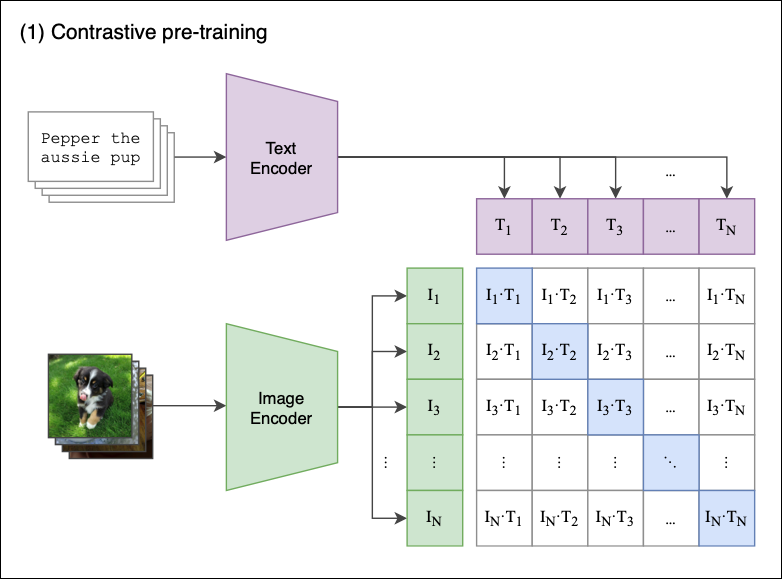

The CLIP Foundation Model. Paper Summary— Learning Transferable… | by Sascha Kirch | Towards Data Science

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

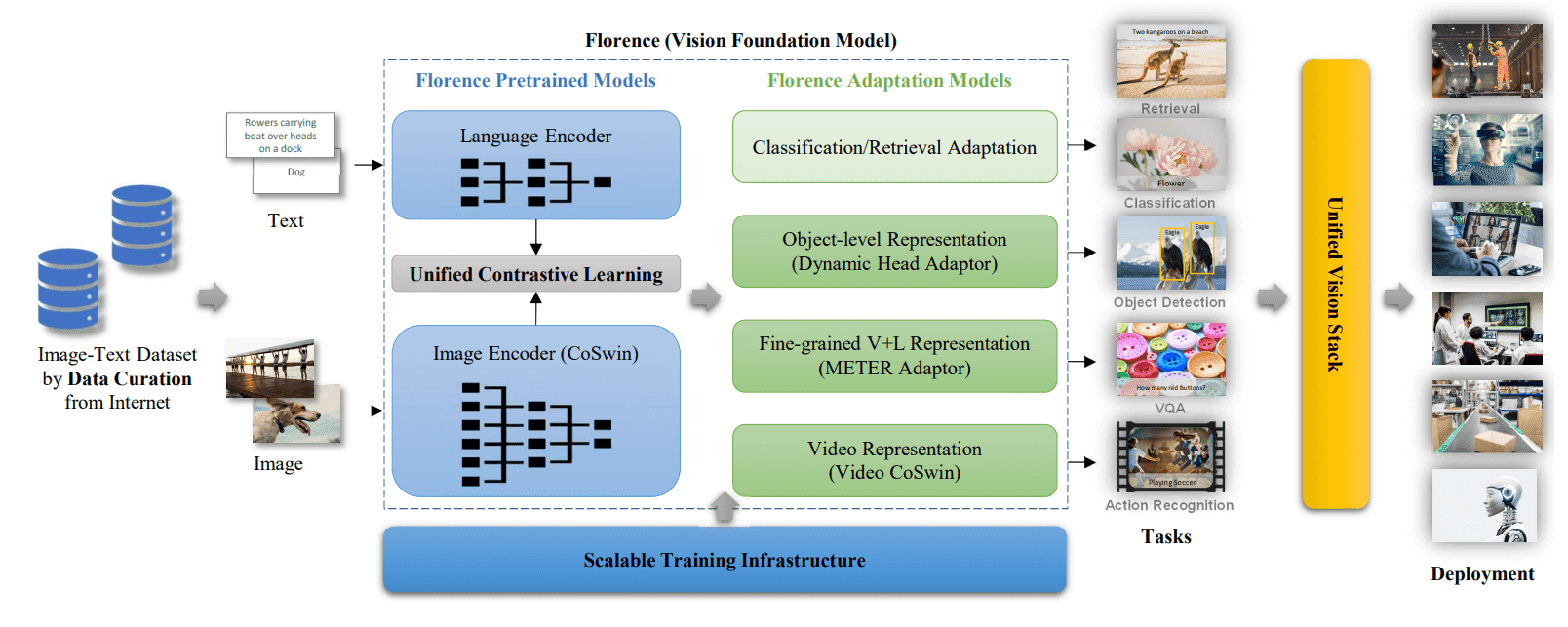

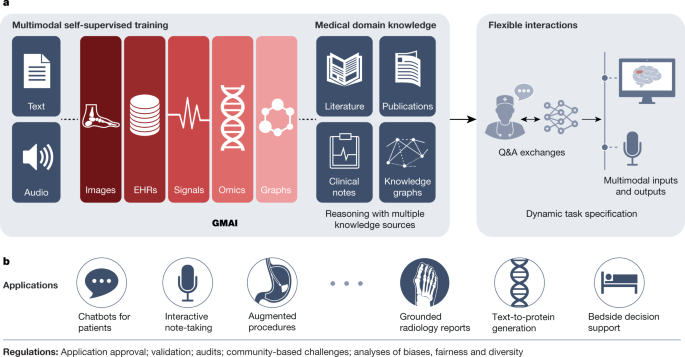

AI Foundation Model and Autonomous Driving Intelligent Computing Center Research Report, 2023 - ResearchInChina

Xiaolong Wang on X: "🏗️ Policy Adaptation from Foundation Model Feedback #CVPR2023 https://t.co/l1vSFtLdYq Instead of using foundation model as a pre-trained encoder (generator), we use it as a Teacher (discriminator) to tell

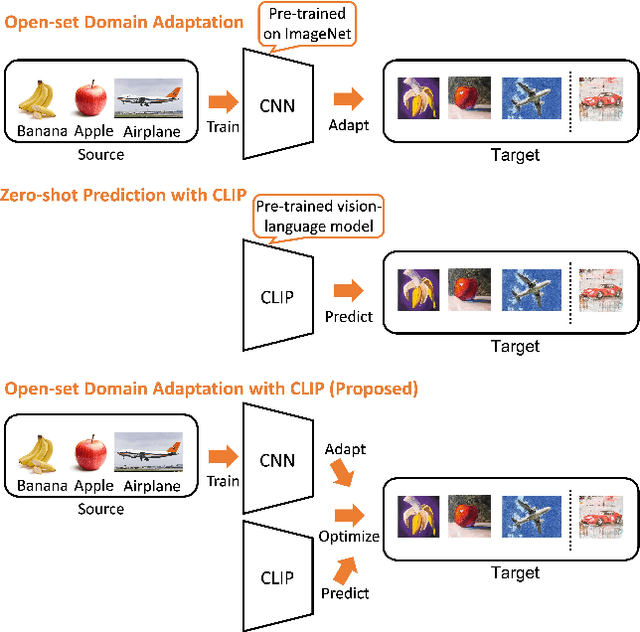

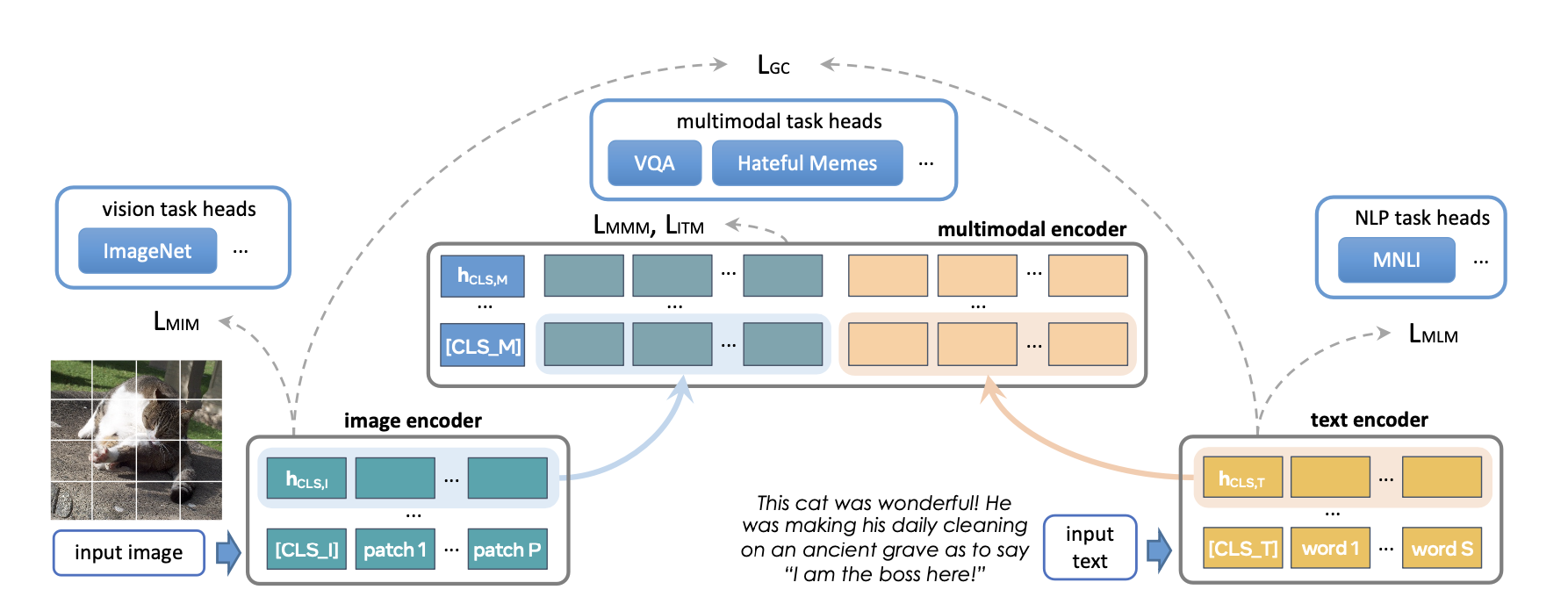

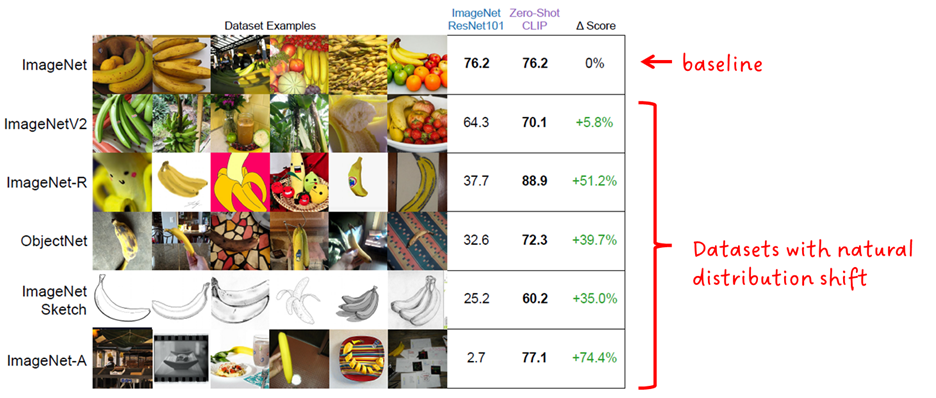

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

![[AUTOML23] Open Foundation Models Reproducible Science of Transferable - YouTube](https://i.ytimg.com/vi/R6olskkLmjA/mqdefault.jpg)